Keywords

Minimally-verbal; Autism; Speech-generating devices; Language activity monitors; Preferences

Introduction

How do you find the sound of your own voice when you hear it played back from a wedding video or some other audio or video recording? Does it sound somewhat unfamiliar? Does it lack the pitch and tone you are used to hearing when you speak? Is it a voice you would choose to communicate with on a speech-generating device if you lost your own voice, temporarily or in the long term? These are some of the questions that form the subject matter of this study.

We ask whether a speech-generating device (SGD) with a recording of the child’s own voice will create a sense of ‘owness for communication’, so that the frequency and richness of augmentative and alternative communication (AAC) use are enhanced [1]. We are not alone in asking this question. A number of research projects are converging on technology that performs a voice transplant of the child's natural voice onto the AAC device to explore this very concept [1,2]. In addition, a recent review of AAC use by children with autism points to an increasing need for experimental research examining children’s preferences for different speech output options on their SGDs [3].

This study examines children’s preferences for voice type on SGDs. Twelve minimally-verbal children with low-functioning autism (LFA) were allocated SGDs programmed with a fixed library of 81 words and phrases (see Appendix A) for 12 weeks. These words and phrases were selected in consultation with the parents, teachers, and speech and language therapists of the 12 children, and closely reflected the typical requests and needs of this small group. Importantly, the words and phrases on the SGDs were available in three voice types: a male digitised speech output, a female digitised speech output, and a recording of the child’s own voice. The study had three main aims: first, to measure the child’s use of this new communication tool over the test period; second, to measure whether the device would increase the frequency of the child’s communication; and third, to assess the child’s preferential use of voice type when using the SGD over the 12 weeks.

Method

Participants

Twelve minimally-verbal children were initially recruited for this part of the study (8 boys, 4 girls). They had a mean chronological age (CA) of 11.5 years (SD = 3.0) and a mean non-verbal mental age (NVMA) of 5.0 years (SD = 2.2). Reports on the children from the National Educational Psychological Service (NEPS) confirmed that all 12 children were minimally verbal, which is commonly defined as ‘speaking fewer than 10 words’ (Koegel, Shirotova and Koegel) [4]. In terms of augmentative and alternative communication (AAC) use, the children’s mastery of PECS ranged from none at all to level 5 [5]. Only six of the twelve children used sign language daily, with the number of signs used ranging from 10 to 15 per child. Jake used no functional speech at all, while the remaining 11 children used between 2 and 10 words per day. Finally, these 12 children understood between 8 and 34 words at the time of testing (Table 1).

| Name | Sex | School | CA (years) | NVMA (years) | IQ | CARS | Level of PECS mastered | No. of words used per day | No. of signs used per day |
|---|---|---|---|---|---|---|---|---|---|
| Jim | M | D | 11.4 | 4.8 | 42.3 | 36 | 4 | 4 | 10 |
| Jake | M | D | 7.2 | 3.1 | 43.6 | 31 | 0 | 0 | 10 |
| Robbie* | M | A | 14.0 | | | 36 | 2 | 2 | 0 |
| Nita | F | A | 13.2 | 8.5 | 64.7 | 38 | 4 | 4 | 0 |
| Bob | M | A | 15.4 | 3.7 | 24.3 | 51 | 4 | 4 | 0 |
| John | M | A | 8.0 | 5.1 | 63.9 | 36 | 4 | 5 | 0 |
| Mark | M | A | 15.0 | 6.1 | 40.8 | 48 | 4 | 4 | 0 |
| Sandra | F | A | 10.7 | 5.9 | 55.0 | 42 | 4 | 4 | 14 |
| Connie | F | A | 11.3 | 4.9 | 43.3 | 34 | 4 | 4 | 10 |
| Francis | M | D | 10.5 | 5.5 | 51.9 | 36 | 2 | 2 | 15 |
| Jason | M | D | 6.5 | 3.2 | 50.0 | 31 | 5 | 5 | 0 |
| Linda | F | A | 14.3 | 7.8 | 54.6 | 36 | 4 | 10 | 4 |

*It was not possible to calculate the NVMA of this child via the BPVS

Table 1: Descriptive data for the 12 children recruited.

Informed written consent was sought from parents and guardians via each child’s school before any child was allocated an SGD. In line with Stake (1995: 58), parents were told why their child had been selected for this part of the study, and every effort was made to explain that the SGDs were not allocated to ‘solve a problem or advance social well-being’ but to add to existing knowledge about the preferences children with autism may show for certain features of AAC tools. A brief written description of the intended casework was provided, and plans to anonymise the identities of the children, their teachers, parents and therapists were clearly set out [6,7].

Materials

The SGDs

The device chosen for this study was the Logan ProxTalker. This device has been defined as ‘an electronic communication aide that produces digitised or synthesised speech upon activation by individuals with little to no functional speech’ [8,9]. Each device measured 12.9 x 7 x 3.5 inches and, with batteries inserted, weighed 4.7 lb (https://www.loganproxtalker.com). The device closely follows the principles of PECS [8], and as PECS was already used by 11 of the 12 participants, it was anticipated that this might ease the children’s adaptation to and use of this particular SGD. Few comparative efficacy studies exist to guide specific AAC choices for research purposes, but there is evidence that children with autism who can use PECS adjust well to making requests via SGDs [8,10,11].

Each Logan ProxTalker came with three sets of 81 picture cards. Each picture card was printed in colour and measured 1.25 x 1.25 inches. The cards in each set corresponded to the list of 81 words and phrases stored on the SGD. The 81 words and phrases were selected in consultation with the parents, teachers, and speech and language therapists of the participants and closely reflected the typical items, people and needs of the participants. When a picture card was placed on any of the five buttons, the device emitted a digitised speech output corresponding to the word or phrase depicted on that card.

Set 1 cards emitted a male digitised voice when placed on the SGD, while Set 2 cards emitted a female digitised voice. The cards in Set 1 had blue borders to distinguish them from Set 2, which had pink borders. Set 3 cards had yellow borders and emitted the recorded voice of the child. The SGD had a number of felt storage pages to which the picture cards could be attached via a Velcro circle on the back of each card. The parents or teachers of each participant could decide how many cards were displayed on the storage pages. Cards not in use could be stored in the clear zip-lock plastic bags supplied with the SGD.

The programming keys: Each SGD was equipped with a set of programming keys (Figure 1). By placing a programming key over any of the five buttons on the SGD, a range of functions could be performed. For instance, the ‘Diagnostics’ key was used to diagnose technical faults with the device, and the ‘Check Battery’ key showed how much battery power remained on the unit. Separate keys increased and decreased the volume. The two keys most relevant to this research were the ‘erase’ and ‘record’ keys.

Figure 1: A graph depicting Sandra's use of the SGD over the 12-week study.

Placing the ‘record’ key on any of the five buttons allowed up to 30 seconds of the child’s own voice to be recorded onto the device. This feature allowed the library of 81 words and phrases to be recorded onto the SGD as the self-voice speech output option. To record the child’s voice, a parent or teacher sounded out the words for the child to imitate, and the adult’s voice was later edited out of the recording. If a mistake was made, placing the ‘erase’ key on any of the five buttons with the relevant picture card erased that recording so that a fresh one could be made.

As well as having coloured borders, each picture card had its own unique code. Codes for picture cards with the male digitised voice started with the digits 0x44, while codes for cards with the female digitised voice began with 0x04. The code for the picture cards used for recording the child’s voice was 0x00. Each SGD was fitted with a language activity monitor (LAM) designed to record these codes and other data (https://www.aacinstitute.org).
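Because the voice type is carried in the code prefix, LAM output can be sorted by voice type without first decoding every word. The following is a minimal Python sketch, assuming each code is logged as a hexadecimal string whose first four characters carry the prefixes described above (0x44, 0x04, 0x00); the function names and the treatment of any other prefix as "other" are illustrative assumptions, not part of the LAM software.

```python
from collections import Counter

# Prefix-to-voice mapping taken from the card codes described in the text.
# Treating the first four characters of a code as its prefix is an assumption.
VOICE_PREFIXES = {
    "0x44": "male digitised",
    "0x04": "female digitised",
    "0x00": "self-voice",
}


def voice_type(code: str) -> str:
    """Return the speech output option implied by a picture-card code."""
    prefix = code.strip().lower()[:4]
    return VOICE_PREFIXES.get(prefix, "other")


def self_voice_percentage(codes: list[str]) -> float:
    """Percentage of logged communication events made with the self-voice option."""
    counts = Counter(voice_type(code) for code in codes)
    total = sum(counts.values())
    return 100.0 * counts["self-voice"] / total if total else 0.0


print(voice_type("0x44001474"))  # male digitised
print(voice_type("0x0400149"))   # female digitised
```

A per-child percentage of this kind is the quantity reported later as the proportional use of self-voice.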

The Language Activity Monitoring device: The LAM software functions like a memory card in a typical mobile phone. It is built into the SGD/AAC device and automatically records every utterance or ‘communication event’ an individual makes when using a communication device, along with the exact time the utterance occurred [12,13]. Importantly, this software works differently depending on which language representation model (LRM) is programmed on the speech-generating device.

An LRM refers to the particular communication method or mode on a communication device; LRMs typically include Semantic Compaction™, alphabet-based methods, and single-meaning pictures [12]. Semantic Compaction™ can be defined as ‘using short sequences of symbols to represent words or phrases that follow specific rules to indicate morphology and enhance vocabulary organisation’ (Todd, 2008: 14). The alphabet-based LRM uses a keyboard (much like the keypad on a typical mobile phone when texting) to spell out words [9,12,14]. The LRM termed ‘single-meaning pictures’ (SMP) uses pictures (such as PECS) to represent a single word, such as ‘walk’, or a single phrase, such as ‘I want’ or ‘I see’ [12]. The LAM software records the LRM used to produce each language event in a language sample [12].

For an SGD using single-meaning pictures (SMP), the data produced can be quite extensive and are presented as lines of codes. These codes represent the picture tags the child placed on each of the SGD's buttons. The first task is to convert these codes into the actual messages communicated by the child. The list of codes corresponds to the list of words and phrases stored in each SGD. Each code is entered as a search term in a software package on the researcher's computer and, once found, is replaced with the appropriate word or phrase. For example, the code 0x44001474 corresponds to the word ‘afraid’ spoken in the male digitised voice, while the same word spoken in the female digitised voice is represented as 0x0400149. Working this way, each code is systematically replaced with words and phrases until the transcript is complete. An example of a communication event made via a single-meaning picture (SMP) LRM and recorded by the LAM software would look as follows (Supplementary Table):

10:46:18 SMP “I want”

10:46:23 SMP “to go”

10:46:25 SMP “home”

10:46:28 SMP “please”

The example communication event above shows the production of the utterance “I want to go home please.” The entire sentence was created using four single-meaning pictures (SMP) placed on the voice-activating buttons of the SGD. Each communication event produced via this LRM is time-stamped, so it is possible to see that the entire utterance took ten seconds (10:46:18 to 10:46:28). For the purpose of this study, customised SGDs using the single-meaning pictures (SMP) LRM were used, and to time-stamp all communication events from the SGD, each device was programmed with a 24-hour clock.
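The decoding and timing steps described above can be sketched in Python. This is an illustration under stated assumptions, not the software package used in the study: the lookup table is hypothetical apart from the ‘afraid’ entry quoted in the text, and decoded lines are assumed to follow the `HH:MM:SS LRM “message”` layout of the example.

```python
from datetime import datetime

# Hypothetical code-to-message table; only the 'afraid' entry comes from the text.
LEXICON = {"0x44001474": "afraid"}


def decode(code: str, lexicon: dict[str, str] = LEXICON) -> str:
    """Replace a LAM code with its word or phrase, flagging codes not in the table."""
    return lexicon.get(code, f"<unknown code {code}>")


def parse_event(line: str) -> tuple[datetime, str, str]:
    """Split a decoded line such as '10:46:18 SMP “I want”' into time, LRM and message."""
    stamp, lrm, message = line.split(" ", 2)
    time = datetime.strptime(stamp, "%H:%M:%S")
    return time, lrm, message.strip('“”"')


def summarise(lines: list[str]) -> tuple[str, float]:
    """Join the messages into one utterance and return its duration in seconds.

    Assumes all events fall on the same day, so the 24-hour clock does not wrap."""
    events = [parse_event(line) for line in lines]
    utterance = " ".join(message for _, _, message in events)
    seconds = (events[-1][0] - events[0][0]).total_seconds()
    return utterance, seconds


example = [
    "10:46:18 SMP “I want”",
    "10:46:23 SMP “to go”",
    "10:46:25 SMP “home”",
    "10:46:28 SMP “please”",
]
print(summarise(example))  # ('I want to go home please', 10.0)
```

Keeping unknown codes visible (rather than silently dropping them) makes gaps in the lookup table easy to spot when transcripts are checked.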

The design of the study

This was a semi-longitudinal study in which 12 children with LFA were allocated an SGD for 12 weeks. The longitudinal approach gave the children time to show their continued interest in using this new form of augmentative and alternative communication (AAC), as well as their preferences for speech output options when communicating. Based on the LAM data downloaded from the devices, a preliminary quantitative analysis could be conducted, followed by a case study approach. The quantitative analysis would show the duration of each child’s use of the SGD over the 12 weeks, the number of their weekly communications, and the function of their communications. It would also be possible to measure the proportional use of the self-voice speech output option compared with the equally available digitised speech output options during the 12 weeks. The case study approach would permit a more in-depth analysis of similarities and differences in the children's use of the SGD (Stake and Yin) [6,7].

Our objectives were threefold. We were interested in (1) whether or not the child would use the device for the duration of the study and (2) how a personalised SGD might affect their rate or quality of communication. Most importantly, we wanted (3) to investigate the child’s preference for the self-voice speech output option on the SGD for communication purposes.

General procedural points

All initial procedures to introduce the participant to the SGD, to train them in the use of the SGD, and to obtain baseline data on each participant’s current level of communication style, occurred in the child’s school during school hours.

Every four weeks, the tester visited the children’s schools and removed the LAM from each SGD. The LAM resembled a USB card and could be inserted into the tester’s computer, where the data were uploaded into a file for later analysis. This process took no more than 5 minutes per device. Any available parental reports were collected on the same date.

Procedures

A demonstration of the SGDs’ operating procedures was given to staff, parents and children in the schools. The tester then allocated the devices to each child and conducted a preference assessment.

Preference assessment

It was considered important to introduce each participant to the SGD to be used in the study, so that it would be possible to see whether the child could adapt to using this particular device and was happy to continue in the research. Research suggests that when introducing a child with autism to augmentative and alternative communication (AAC) such as PECS, a preference assessment is typically a critical step towards teaching the child to use the system [5,8,15]. This ‘critical step’ was recently adapted and used successfully by researchers when introducing an SGD using single-meaning pictures (SMP) as an LRM to three children with autism [8].

A training day was organised at each child's school and a stimulus preference assessment was conducted [8]. The assessment of preferred stimuli refers to identifying items or events that a child will normally work to gain access to [16]. For example, in one instance teaching staff identified access to the playground as a strong reinforcer for a particular child, a segment of a favourite piece of music as a second reinforcer, and two food items as the third and fourth preferred items. These four items were then used to teach the child to make requests using the SGD.

The SGDs were placed on each participant’s desk in their classroom. Only the picture cards corresponding to the four reinforcers were left on the communication device. These four picture cards were also restricted to the digitised speech output matching the gender of the child using the device (e.g., a boy used an SGD with a male digitised speech output only, while a girl used an SGD with a female digitised speech output only). Male cards were highlighted with a blue outline, while a pink outline framed the female cards.

On completion of a school task, the child’s special needs assistant (SNA) encouraged the child to point to the picture of the activity or item they wanted as their ‘reward’ or ‘reinforcer’. The SNA prompted the child to place the tag (e.g., playground) on one of the five buttons on the SGD, and when the device generated the word ‘playground’ the child was given instant access to that reinforcer. Over the course of the morning, prompting by the SNA was faded, and when the child made three consecutive unaided requests for reinforcers, the child was deemed to understand making one-word requests via the SGD. No child failed this criterion.
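The mastery rule used here (three consecutive unaided requests) can be stated precisely as a small function. This is a minimal Python sketch, assuming each training trial is recorded as True for an unaided, successful request and False otherwise; the function name and the trial record in the example are hypothetical, not data from the study.

```python
from typing import Optional


def trial_meeting_criterion(trials: list[bool], run_length: int = 3) -> Optional[int]:
    """Return the 1-based trial number at which run_length consecutive unaided
    requests are first reached, or None if the criterion is never met."""
    streak = 0
    for number, unaided in enumerate(trials, start=1):
        # A prompted or failed trial resets the count of consecutive successes.
        streak = streak + 1 if unaided else 0
        if streak >= run_length:
            return number
    return None


# Hypothetical morning of training: prompting is needed on trials 1 and 4.
print(trial_meeting_criterion([False, True, True, False, True, True, True]))  # 7
```

The same three-in-a-row rule was applied in the later training steps, so the function can be reused unchanged for them.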

For the next step, picture cards saying ‘I want’ were placed on the SGD. This time, when the child had completed a school task, the SNA prompted the child to place this tag on the first button followed by the tag of their choice (e.g., computer) to create a two-part sentence, ‘I want computer’, after which the child was given instant access to that reinforcer. Over the course of the training period, prompting was again faded. If the child ‘forgot’ to place the ‘I want’ tag on the device, the SNA removed the ‘computer’ card and showed the child how to use the two cards together to create a two-part sentence. When the child completed three consecutive requests using two picture cards, the next step was introduced.

The third step involved placing cards corresponding to the child’s more typical communication needs onto the SGD. Generally these were tags for ‘mammy’, ‘daddy’, ‘toilet’, ‘stop’, and ‘home’. Previous research indicates that children acquainted with PECS typically adapt quickly to using this type of SGD [8,15], and this finding was supported during this stage of the present study. Training in the general use of the SGD therefore took no more than two hours per child.

The final step of the training day introduced the children to the two digitised speech output options available on the SGD. For this step the device was again limited to just four picture cards: two emitted the words ‘I see’ and two emitted the word ‘teacher’, and in each pair one card had a male voice and the other a female voice. The child was shown that blue-edged picture cards produced a male speech output while pink-edged cards produced a female speech output. The blue-edged cards were placed on the SGD and the tester/SNA said ‘That sounds like a boy’. This procedure was repeated with the pink-edged cards to show how to activate the female digitised speech output option.

The tester then pointed to the child’s teacher and asked the child, “Who do you see?” If the child was a girl, she was prompted to place the pink-edged card for ‘teacher’ (female voice output) on the SGD; if the child was a boy, he was prompted to place the blue-edged card for ‘teacher’ on the SGD. When the child chose the cards reflecting their own gender, they were praised verbally and given a small reinforcer such as a sweet. If the child made an incorrect choice, or chose one card in the male voice and the second in the female voice, the tester said ‘I think it must be this one’ and showed the child which cards produced the speech output that best reflected their own gender. When a child used the voice representing their own gender on three consecutive occasions without prompting or reinforcement (e.g., a small sweet or clapping), it was accepted that the child knew which voice output related to their own gender. No child failed this section of training, which took less than one hour per child.

A Trial Period with the SGD: Once it was established that the 12 participants could use the SGDs, each child was allocated the device for a trial period. The rationale for this trial period was twofold. First, it controlled for any novelty effect of using an SGD for the first time that might skew the data (Emms). Second, it gave both parents and participants a cooling-off period during which they could withdraw from the study if they felt it was not for them [17].

Over this two-week period, parents and teaching staff were encouraged to record the child’s voice onto the SGD using the set of programming keys. In most instances, recording the children meant an adult sounding out the words for the child to echo, with the adult’s voice subsequently edited out of the recording. Using this method, the child could be recorded saying each of the 81 words and phrases corresponding to the picture cards supplied with the SGD, so that a library of vocabulary was set up on the device.

If the 81 words and phrases did not match the needs of the child

While the library of words and phrases stored on the devices had been constructed in consultation with parents and teachers, there were occasions where a parent or teacher felt a particular child might prefer words or phrases closer to those they heard at home or at school. For instance, two of the 81 picture cards referred to the ‘computer’ and the ‘playground’. The parent of one child said she would not typically use those terms with her son; instead, she asked him whether he wanted to play with the ‘pooter’ or to ‘go to the swings’. As these ‘replacement’ words retained the meaning of the original picture cards, so that the LAM could still record their use correctly, it was considered acceptable to record these versions instead. It was made clear to parents and teachers, however, that totally novel words were not to be recorded over the library of the original 81 cards.

If the 81 standard words and phrases were insufficient

The 81 words and phrases provided also proved insufficient for some parents, teachers and children. For example, three parents reported that their children regularly suffered from ear infections, and they wanted picture cards that could say ‘doctor’, ‘pain’ or ‘Calpol’ on their children’s devices. To accommodate this, a set of thirty blank picture cards was provided with each SGD. Each of these cards could hold up to thirty seconds of recorded speech and had a code printed clearly on the back. These codes ranged from 0x01 to 0x030. Parents and caregivers were asked to note which words or phrases were recorded onto each blank tag, matching each recording to the code on the back of the card. To support this, a predesigned table was included, listing the codes of the blank tags down the left-hand side with spaces to log what was recorded on each tag.
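When the LAM data are decoded, the parents’ log of blank tags has to be merged with the standard code list, and any code that appears in the data but was never logged needs to be flagged. A minimal Python sketch, assuming both lists are held as code-to-message dictionaries; the function names and example entries are hypothetical (‘Calpol’ is one of the words parents asked for).

```python
def build_lexicon(standard: dict[str, str], blank_card_log: dict[str, str]) -> dict[str, str]:
    """Merge the standard code table with the parents' blank-card log."""
    lexicon = dict(standard)
    for code, message in blank_card_log.items():
        # A blank-card code should never collide with a standard card code.
        if code in lexicon:
            raise ValueError(f"blank-card code {code} clashes with a standard card")
        lexicon[code] = message
    return lexicon


def unlogged_codes(lam_codes: list[str], lexicon: dict[str, str]) -> list[str]:
    """Codes that appear in the LAM data but have no entry in either table."""
    return sorted(set(lam_codes) - set(lexicon))


lexicon = build_lexicon({"0x44001474": "afraid"}, {"0x01": "Calpol"})
print(unlogged_codes(["0x01", "0x02", "0x44001474"], lexicon))  # ['0x02']
```

An unlogged code most likely means a blank tag was recorded but not entered in the table, which is the gap the predesigned table was meant to prevent.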

At the end of this two-week trial period, a young girl called Linda (Table 1) had completely abandoned the SGD and reverted to using PECS. It is difficult to be sure why Linda rejected the device. It could be that the device simply ceased to ‘interest’ her after the first few days. Alternatively, after almost 12 years of using PECS and Lámh, she already had a learned behaviour in place for effective communication, making it difficult for her to adapt to this new communication mode. Eleven of the twelve children originally recruited were operating the SGDs and progressed to the semi-longitudinal phase of the study [18].

Results

Quantitative analysis

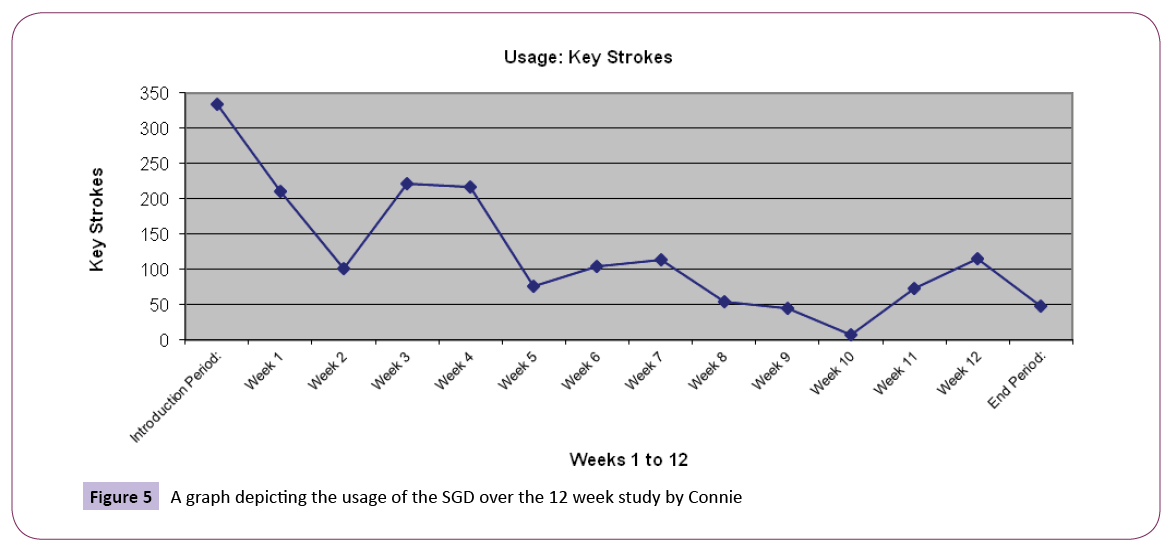

The LAM data were first examined to investigate each child’s use of the SGD over the duration of the study. This involved counting each communication event a child made via the SGD, where a communication event is defined as a child placing a single-meaning picture (SMP) on a button to create an utterance via the SGD. Over 12 weeks, the 11 participants made an average of 2923.4 communication events (SD = 5144.1), ranging from 131 to 18207 per week. The boys made fewer communications (m = 1391.3, SD = 1034.2) than the girls (m = 7008.6, SD = 9702.9), and children from School A (n = 7) made more communication events (m = 3952, SD = 6331.8) than those attending School D (n = 4, m = 1122.7, SD = 1109.3). The data also revealed that five children had stopped using the SGDs by week 6. Over this time, the communication events of these 5 children (m = 1071.0, SD = 643.1) ranged from 131 to 1716 per week.

A series of three Mann-Whitney U tests was conducted to investigate whether the children’s IQ, NVMA or CA influenced their decision to reject or keep the device. The first revealed no significant difference in the IQ scores of the children who left the study by week 6 (Md = 44, n = 5) and those who continued (Md = 42, n = 6), U = 13, z = -.36, r = 0.1. In addition, there was no significant difference in the NVMA of those who left (Md = 4 years, n = 5) and those who remained (Md = 4 years, n = 6), U = 11, z = -.73, p = .46, r = -0.2. Finally, there was no significant difference in the CA of those who rejected the devices (Md = 10 years, n = 5) and those who remained (Md = 11 years, n = 6), U = 12, z = -.54, p = .58, r = -0.1 (Table 2).

| Id No. | Name | Sex | School | CA (years) | NVMA (years) | IQ | T1 (max 16) | T2 (max 20) | T3 (max 20) | T4 (max 2) | T5 (max 10) | % use of self-voice |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | Jim | M | D | 11.4 | 4.8 | 42.3 | ** | 19 | *** | 2 | 10 | 40 |
| 17 | Robbie | M | A | 14.0 | * | | 16 | 8 | 18 | 2 | 10 | 22 |
| 19 | Nita | F | A | 13.2 | 8.5 | 64.7 | 16 | **** | 20 | 2 | 10 | 24 |
| 29 | Sandra | F | A | 10.7 | 5.9 | 55.0 | 16 | 6 | 19 | 2 | 10 | 2 |
| 33 | Connie | F | A | 11.3 | 4.9 | 43.3 | 16 | 20 | 20 | 2 | 7 | 79 |
| 7 | Francis | M | D | 10.5 | 5.5 | 51.9 | 10 | 10 | 18 | 1 | 10 | 28 |

All names shown are pseudonyms. CA refers to chronological age; NVMA refers to non-verbal mental age. IQ is calculated as MA/CA x 100. T1-T5 refer to Tests 1-5: T1 = Test of Familiarity; T2 = Test of Recollection; T3 = Voice-Face Matching; T4 = Self-Voice Recognition; T5 = Pure Voice Recognition.

* It was not possible to calculate Robbie’s NVMA via the BPVS.

** Jim was distressed on the day of testing for T1.

*** Jim scored below chance on T3.

**** Nita was distressed on the day of testing for T2.

Table 2: Descriptive data for the 6 children who continued participating in the study after week 6.

Next, the children’s preference for communicating via the self-voice speech output option was measured. The data showed that the children (n = 11) used the digitised speech output options more than 60% of the time. The girls (n = 3) showed a greater proportional use of their own recorded voice (m = 35.6%, SD = 38.5) than the 8 boys (m = 21%, SD = 13.5), but a Mann-Whitney U test revealed no statistically significant difference between boys (Md = 20, n = 8) and girls (Md = 23, n = 3) in their use of self-voice, U = 11, z = -.20, p = .83, r = -0.06. Nor was there a significant difference in the number of self-voice communications made by the 5 children who stopped using the devices in the first half of the study (Md = 190, n = 5) and those who continued for the duration (Md = 565, n = 6), U = 5.0, z = -1.8, p = .06, r = -0.5.
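The comparison of self-voice use between the children who stopped and those who continued can be reproduced from the counts in Table 3. The sketch below is a minimal Python implementation of the Mann-Whitney U test using the normal approximation, without tie or continuity corrections, with the effect size computed as r = z / √N. It assumes that the five children who stopped by week 6 are those absent from Table 2 (Jake, Bob, John, Mark and Jason). Statistical packages may report slightly different p values, for example when they use an exact test.

```python
import math


def rank_with_ties(values: list[float]) -> list[float]:
    """Assign 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney(a: list[float], b: list[float]) -> tuple[float, float, float]:
    """Return (U, z, r): U is the smaller of U_a and U_b, z uses the normal
    approximation, and r = z / sqrt(N) is the effect size."""
    n1, n2 = len(a), len(b)
    ranks = rank_with_ties(list(a) + list(b))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    return u, z, z / math.sqrt(n1 + n2)


# Self-voice communication counts from Table 3.
stopped = [18, 177, 252, 288, 190]            # Jake, Bob, John, Mark, Jason
continued = [1334, 919, 681, 449, 264, 69]    # Connie, Jim, Robbie, Sandra, Nita, Francis
u, z, r = mann_whitney(stopped, continued)
print(round(u, 1), round(z, 2), round(r, 2))  # 5.0 -1.83 -0.55
```

These values agree with the reported U = 5.0 and z = -1.8, and the medians of the two lists are 190 and 565 respectively.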

Finally for this section, the 11 children had previously been assessed on recognition memory, and their use of self-voice on the SGDs was positively correlated with their performance on a Test of Familiarity (r = .57, n = 11, p = .06), with greater use of self-voice associated with higher familiarity scores, although this correlation fell just short of statistical significance. There was a negligible correlation between the use of self-voice and children’s performance on a Test of Recollection (r = .03, n = 11, p = .91), so these data provide no evidence that self-voice was used more by children who recognised the source of the voice as their own (Table 3).

| Name | Sex | School | CA (years) | NVMA (years) | Test of Familiarity score, Study 1 (max 16) | Test of Recollection score, Study 2 (max 20) | Number of communications made via the self-voice option | % of all communications made via the self-voice option |
|---|---|---|---|---|---|---|---|---|
| Connie | F | A | 11.3 | 4.9 | 16 | 20 | 1334 | 79 |
| Jim | M | D | 11.4 | 4.8 | | 19 | 919 | 40 |
| Robbie* | M | A | 14.0 | | 16 | 8 | 681 | 22 |
| Sandra | F | A | 10.7 | 5.9 | 16 | 6 | 449 | 2 |
| Mark | M | A | 15.0 | 6.1 | 16 | 11 | 288 | 34 |
| Nita** | F | A | 13.2 | 8.5 | 16 | | 264 | 24 |
| John | M | A | 8.0 | 5.1 | 12 | 9 | 252 | 27 |
| Jason | M | D | 6.5 | 3.2 | 11 | 12 | 190 | 11 |
| Bob | M | A | 15.4 | 3.7 | 16 | 16 | 177 | 11 |
| Francis | M | D | 10.5 | 5.5 | 10 | 10 | 69 | 28 |
| Jake | M | D | 7.2 | 3.1 | 12 | 15 | 18 | 16 |

*It was not possible to calculate Robbie’s NVMA via the BPVS.

**The recorded voice on Nita’s device was that of her aunt, not her own voice.

Table 3: Descriptive data showing the children’s use of the self-voice speech output option on the SGDs.

A case study approach

This was an investigation of how an SGD with a self-voice speech output option was used by 12 children with autism over a 12-week period. Specifically, we were interested in (1) the child’s use of this communication device per week, (2) the frequency of the child’s communications via the SGD per week, and (3) the child’s preference for the self-voice speech output option when communicating over the 12 weeks.

One of the first findings from the quantitative analysis was that some children stopped using the SGDs before the end of the first half of the study. The data could not explain why these children stopped; instead, they revealed no statistically significant differences in CA, NVMA or IQ between the five children who rejected the SGD by week 6 and the six children who used the device for the duration.

The LAM data were useful for showing the number of communication events made by each child, and for comparing boys with girls, the two schools, and the children who left the study by week 6 with those who did not. The LAM data were also a valuable means of calculating how many times each child chose to communicate via a digitised speech output and how many utterances they made using their own voice recorded onto the device.

Equally significant, however, was what we could not decipher through quantitative analysis alone. For instance, why did five boys stop using these devices? If not on the grounds of CA, NVMA or IQ, what factors formed the basis of their decision? In addition, while we can see that all 11 children made communication events using the SGDs, what was the function of these communications?

A case study approach can often provide a more in-depth analysis of a single case [6,7]. Yin notes that there are six different types of case study, while Stake (1995: xi-xii) pays little attention to ‘quantitative case studies that emphasise a battery of measurements of the case’ and instead presents a more qualitative approach that emphasises ‘episodes of nuance, the sequentiality of happenings in context, the wholeness of the individual’.

The following 11 case studies are presented in two sections. Section 1 discusses the five children who rejected the SGDs, and Section 2 focuses on the six who used the devices for the duration. Both sections draw on observational notes gathered during the collection of baseline data, and on secondary data such as the children’s ABLLS-R and CARS reports, parental surveys, and reports from teachers, parents and speech and language therapists [7]. The central objective of this approach is to better determine the research design, data collection methods and participant selection procedures for subsequent studies investigating children’s preferences for speech output options on SGDs.

Section 1

The children who rejected the SGDs

JASON was a six-year-old boy who had attended his current school for just over one year. He was the younger of two children and his older brother also had a diagnosis of autism. Jason communicated using a combination of eye gaze, pointing, and a vocabulary of approximately five words (e.g., mammy, daddy, car, yes, and stop). He had been using PECS as a mode of communication since he was five years old and was currently operating at phase 5 level, which meant he could spontaneously request a variety of items and could answer the question ‘What do you want?’ [5].

Jason was recruited for the longitudinal study when three of the children from the target group dropped out. He adapted well to the introduction phase of the study and was using the device to request items prior to and during the 14-day trial. Both his parents and his teachers reported that during this period he never used his PECS, preferring instead to use the SGD.

Jason rejected the SGD at week 4. During this time he made a total of 1717 communication events (defined here as a child placing a single-meaning picture (SMP) on a button to create an utterance via the SGD) ranging from 1-1357 per week. The majority of his communications (80%) occurred in the first two weeks of the study and he used the male digitised speech output for 89% of his communications.

Both the speech and language therapist (SLT) and his parents reported that after the trial period, Jason began to use the SGD very much ‘like a toy.’ For example, a note made on the parental survey confirmed that Jason liked to place a card on the device to hear the same word emitted in a repeated fashion. Similarly, the LAM data indicated that on three separate occasions, the word ‘yellow’ was uttered up to 46 times in succession.
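Runs such as ‘yellow’ repeated 46 times in succession can be picked out of the log mechanically by scanning for the longest stretch of identical consecutive entries. The sketch below is illustrative only: it assumes the log has already been reduced to a list of words in recorded order, ignores the time between presses, and uses an invented sequence rather than Jason’s actual data.

```python
from itertools import groupby


def longest_repeat(words):
    """Return (word, run_length) for the longest run of identical consecutive words.

    Only log order is considered; the time between presses is ignored.
    """
    best_word, best_len = None, 0
    for word, run in groupby(words):
        run_len = sum(1 for _ in run)
        if run_len > best_len:
            best_word, best_len = word, run_len
    return best_word, best_len


# Invented sequence mimicking the reported pattern; not study data.
log = ["car"] + ["yellow"] * 46 + ["stop", "stop"]
print(longest_repeat(log))  # ('yellow', 46)
```

A stricter version might also require presses within a few seconds of one another before counting them as one repetitive burst; that threshold would be an analytic choice, not something reported here.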

When the school was visited by the researcher at week 4, Jason had reverted to using PECS and was not interested in the SGD. The device was left in his classroom for the remainder of the study in case he changed his mind, but he did not. During the second visit at week 8, his mother described Jason’s rejection of the SGD as ‘understandable’, as it was ‘very heavy to carry around’ and he often appeared ‘confused’ by the number of picture cards made available to him on the storage pages of the device. His mother also felt that because Jason’s older brother did not have a SGD but instead communicated via PECS, it was ‘easier for Jason to stick with what he saw at home’.

Analysis

It is interesting that Jason was reported to have used the SGD as a toy rather than as a means of functional communication, but it is not totally surprising. Autism is a spectrum disorder which implies huge differences among these children [19]. Previous case study evaluations of therapeutic/educational interventions for the child with LFA suggest that for some children the simple cause and effect of certain technologies such as the AIBO dog or robotic dolls serve to encourage ‘tactile and playful explorations’ which in turn lead to ‘the development of basic imitation’ and ‘communication with other people’ [20]. For other children however it is sometimes noted that these technologies are distracting and serve only as cause-and-effect toys [20]. This latter observation appears to have been the case for Jason.

Another element underpinning Jason’s rapid rejection of the device may have been its physical size and weight. The SGD used in this study measures 12.9 × 7 × 3.5 inches and, with its batteries, weighs 4.7 lbs (https://www.loganproxtalker.com). A recent review of different SGDs and their design suggests that children often reject devices that are considered ‘cumbersome’ [21]. It is further suggested that larger devices (as opposed to iPhone or Android phones with speech-activated features) often serve to ‘stigmatise’ the user [21]. These barriers may have led Jason to revert to more discreet AAC methods such as sign language or PECS [22].

Finally, the child with autism can show difficulties generalising behaviours learned in one domain (e.g., school) to another (e.g., home) [23,24]. Because the introduction, training, and trial periods with the SGDs were conducted in the school domain, a schedule of reinforcements for using the device at home would likely have been useful to encourage Jason’s use of the device in the home.

All in all, a ‘bottom-up’ approach to introducing AAC interventions would potentially have been best here. In other words, it would have been more advantageous for this child had the communication device allocated to him been better matched to his current level of needs and abilities. Something smaller, more portable, and easier to use across several domains, such as the iPhone with the ‘Grace app’ (https://www.graceapp.com), may have worked better. A ‘clinical feature matching’ approach is also endorsed by Blischak and Shane et al. [21]. In addition, it is well established that when the preferences of the child are not taken into account during AAC decision-making, partial or complete rejection of the allocated AAC can occur [3,21]. This may help explain why Jason abandoned this SGD.

JAKE was seven years old at the time of testing and he had attended school with an autism-specific educational focus since the age of five years. He did not talk and refused all eye contact. Reports held at his school indicated that he did not understand social interactions, he often secluded himself from other people, and even his mother found it difficult to interact with him. He had not adapted to PECS and while he used up to ten signs a day to make himself understood, most of these comprised tugging at an adult’s sleeve or using eye gaze to communicate his needs.

Prior to conducting the semi-longitudinal study, Jake’s mother described the situation as ‘very difficult’ and said that all attempts to get Jake to communicate via PECS had failed to date. His mother was somewhat sceptical about the SGD as a communication tool for her son, and although she consented to his participation, she said she doubted he would ‘go anywhere near it.’

Teaching staff indicated that, while he often appeared ‘lost in his own world’, Jake was very cooperative and ‘compliant’ when engaged with directly and given clear instructions. Accompanied by his teacher, Jake participated in the introduction period with the SGD. He also used the device over the 14-day trial period; however, during this time he made only 9 single-word communication events.

Jake rejected the device at week 5. Over this period his communication events ranged from 4 to 80 per week and totalled 131, with 87% of these made during the school day. The LAM data revealed that 84% of all his communication events were made in the first two weeks, and these were conducted via the male digitised speech output option. During this period, Jake used the device primarily to request food and snacks, placing one single-meaning picture card on a button at a time. The parental survey confirmed that by week 5 Jake had reverted to pre-linguistic communication such as pulling at adults’ sleeves and pointing.

Analysis

Firstly, it is informative that a child who had not adapted to using PECS or more conventional sign language could, and did, communicate functionally via the SGD. He used it to make requests, and for a child who does not appear to be very socially interactive either at home or at school, this is an encouraging finding. Secondly, it is interesting that almost 90% of these communications occurred during school hours. It is possible to infer that this child, who apparently could and would engage with others when asked to, used the SGD only when asked or prompted to. Like all young children, the child with autism learning a new skill will benefit from frequent practice and immediate reinforcing feedback [24,25]. However, while the typically developing (TD) child may take their cues about learning a new skill (e.g., tying their shoelaces) from watching others demonstrate the task or from spoken instructions, the child with autism may need a prompt, or an extra stimulus introduced to a situation (e.g., hand-over-hand instruction on tying laces), that elicits the desired response [23,24]. For many children with autism, prompts need to be continual and only faded out over time. In a few instances, a child with autism can become what is known as ‘prompt-dependent’.

Jake appears to have required a certain amount of prompting to encourage his participation in class and to engage with testing. This suggests that he may have benefited from more structured instruction throughout the first few weeks of his use of the SGD, rather than the more ecological approach adopted here. Further support for this argument comes from a recent review of SGD use by children with autism, which found that these devices were often abandoned if the instructional approach at the start of the intervention was insufficient or when generalisation and maintenance strategies were not taught to the child using the device [3]. Future research would benefit from not assuming that a naturalistic approach, such as the one adopted here, will work for all children with LFA.

MARK was fifteen years old and had been attending school since he was eight. Prior to this he was home-educated via Applied Behaviour Analysis (ABA) methods. Mark engaged in echolalia (i.e., the immediate or delayed repetition of words or phrases he had heard) and had a verbal repertoire of approximately five words. He was communicating with PECS at phase 4 level, which meant that he could build two-part sentences to communicate his needs.

The speech and language therapist (SLT) at Mark’s school felt that this adolescent was a ‘prime example of a prompt-dependent child.’ The SLT indicated that Mark was so reliant on a schedule of prompts and reinforcers that he never acted or communicated spontaneously. He also remarked that Mark was capable of much more than one might imagine on first meeting him.

Mark participated in the introduction to the SGD and over the 14-day trial period, he made 192 communications. All of these communications were two-part messages such as ‘I want— chocolate’ or ‘I like—crisps’ suggesting he could use this form of AAC as functionally as he used PECS.

Mark abandoned the device at week 5, after making a total of 699 communication events ranging from 1 to 248 per week. The LAM data shows that 40% of these communications took place in the first two weeks after the trial period and that Mark used the digitised speech output on his device 88% of the time. The device was used primarily to request food items.

Analysis

Mark was one of the older participants in this study. His CARS report indicated a rather severe form of autism, and his SLT suggested that he never initiated behaviours or communication events with others, preferring instead to respond only to requests, which were always reinforced. Yet his performance on the five tests conducted here suggests that he could stay on task, understand what was expected of him, and score well above chance on tasks that assessed the declarative memory system. Moreover, his use of the device over the five weeks was relatively consistent.

Given that adults such as teachers and parents were specifically asked not to prompt the child’s use of the device (the interest being in whether a recording of self-voice on the device would reinforce the child’s desire to communicate more often), it is possible to speculate that Mark could, and did, use the device independently over the five weeks.

So why was it rejected at week 5? One possible reason is that, over the course of the study, only 39 of the 81 words and phrases stored on the SGD were recorded in Mark’s own voice. Teachers reported a lack of time to record the full library of words, and an incomplete parental report suggests time was also an issue in the home. The hypothesis of van Santen and Black [1] is that a SGD that sounds more like the child will enhance the frequency and quality of communication. In addition, it is suggested that when the user’s preferences are not valued during AAC decision-making, partial or complete abandonment of AAC in the home and school settings may result [26]. Given that this child could recognise his own voice at test, he may have valued the device more if the library of words recorded in his own voice had been extended. Future research would benefit from giving teachers and caregivers more support in creating the library of words recorded in the child’s voice.

JOHN was an eight-year-old who had been attending school since the age of five but had been in his current school for only eight months. He was building two-part sentences using PECS and was using up to five functional words a day (e.g., yes, no, want, home, and Game-Boy). His teachers reported that over the past six months his focus and attention lasted only a very short time (i.e., a maximum of five minutes) and that he could be aggressive towards others and engage in self-injury. His parental survey informed us that John was a foster child who had been with his foster family for only eight months, and that they were concerned he was unhappy in his new school.

Unfortunately, John abandoned the device after week 5. Up to that point, his communication events totalled 5987 and ranged from 111 to 260 per week. The LAM data showed that 43% of his communications were conducted in the first two weeks of the study. He used the digitised voice for 73% of all his communications and used the device primarily to request items such as books, games, and puzzles.

A parental report at week 4 indicated that John became unwell in early March and did not return to school (where the device was left) until the last week in March. This time frame corresponds with John’s initial abandonment of the device. It is likely that this break of almost three weeks seriously interrupted his focus and attention toward the device. Children with autism frequently require routine for slow, incremental learning [23,24]. If this routine is broken, the child may take a while to re-adjust. This may be a factor in why this child abandoned the device. Alternatively, given the degree of change in his personal life, the introduction of a new AAC may simply have occurred at the wrong time.

BOB was 15 years old at the time of this study. He was close in age to Mark; they came from the same village, and had attended the same home-tutor before they both began school at the age of eight years. This child rarely interacted on his own initiative and generally ignored other children around him in the classroom and the playground.

It was possible to record all 81 words comprising the SGD vocabulary in Bob’s voice in less than two hours, as he would repeat any word he was asked to. His parents commented that over the 14-day trial period Bob reached out to his father as if to ask him to ‘join in’ placing SMP cards on the buttons of the SGD. His mother described this type of behaviour as ‘remarkable’, as he was typically a very withdrawn child. Despite the enthusiasm of his parents, and Bob’s own initial excitement about the SGD, he rejected the device by week 6. He had made a total of 1633 communication events, ranging from 76 to 707 per week. Bob conducted up to 47% of his communications with this device in the first two weeks and used the male digitised speech output option 89% of the time.

Analysis

Of the 11 children recruited for this study, Bob had the lowest NVMA and the lowest IQ scores (Table 1). Furthermore, Bob, like many children with autism, had a preference for a predictable, structured and safe environment. He seemed to like being ‘in control’ of situations, interacting only on his own terms and with whom he chose. He was very used to an Applied Behaviour Analysis approach up to the age of five years, and within school he was using a system called TEACCH (Treatment and Education of Autistic and related Communication handicapped Children; Watson, Lord, Schaffer, and Schopler) [27]. Both these approaches emphasise structure and use prompts and rewards to elicit appropriate behaviours [23]. Accordingly, he might have been expected to engage well with a piece of technology that functioned in a constant and predictable manner. The finding that he did not may imply that he did not ‘prefer’ to use this voice type on his SGD when communicating.

Summary

Combined, these findings suggest that these five children exhibited an initial interest in, and ability to use, a speech generating device. This finding is consistent with previous research investigating AAC and the child with autism [3,8,16]. However, the results also suggest that after a relatively short time the ‘dazzle’ of this new communication tool may wear off, a finding which resonates with the work of Shane, Laubscher, Schlosser, Flynn, Sorce, and Abramson [21]. Significantly, the data demonstrate a clear preference among these five children for a digitised speech output option. This important finding is consistent with previous studies showing a lack of preference for the sounds of natural speech, as opposed to electronically produced speech, in young children with autism spectrum disorder [28,29].

Section 2

The children who continued to use the SGD

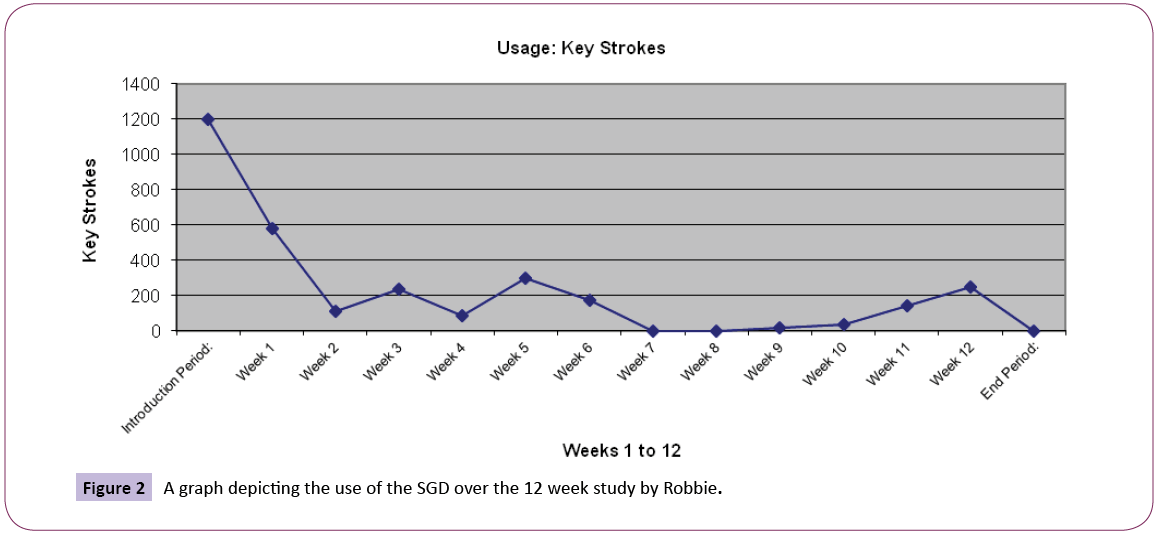

This meant that six children continued to use the SGD between week 6 and week 12. Descriptive data for these children (including their scores on five empirical studies conducted prior to the semi-longitudinal study) are shown in Table 1. Each of the six children will be discussed individually, beginning with Sandra, who communicated via the self-voice speech output option least of all participants, and ending with Connie, the child who chose this speech output on her SGD most during this study. A brief synopsis of their performance on the five empirical tests conducted thus far will be outlined, followed by the findings from the LAM data and from sources such as the parental reports or discussions with their teachers or clinicians. A general discussion of these findings will be provided in the Discussion which follows this section.

SANDRA

SANDRA was an only child with a diagnosis of moderate to severe autism. She communicated primarily through nonverbal means and used communication solely for behavioural regulation. She initiated requests by reaching for the hand of her communication partner (e.g., parent or teacher) and placing it on the desired object. When cued, she used an approximation of the ‘more’ sign when holding the hand of the communication partner, along with a verbal production of /m/. She had approximately six functional words (i.e., mamma, papa, no, yes, go away, and doggie) but tended to use each of these words fewer than four times in any given day.

Sandra knew about 10 approximate signs when asked to label objects or people in the classroom, but these were not used in a communicative fashion. Protests were demonstrated most often through pushing hands. She played functionally with cause-and-effect toys when seated and used eye gaze appropriately during this type of play, but otherwise eye gaze was absent. Sandra often appeared to be non-engaged and responded inconsistently to her name.

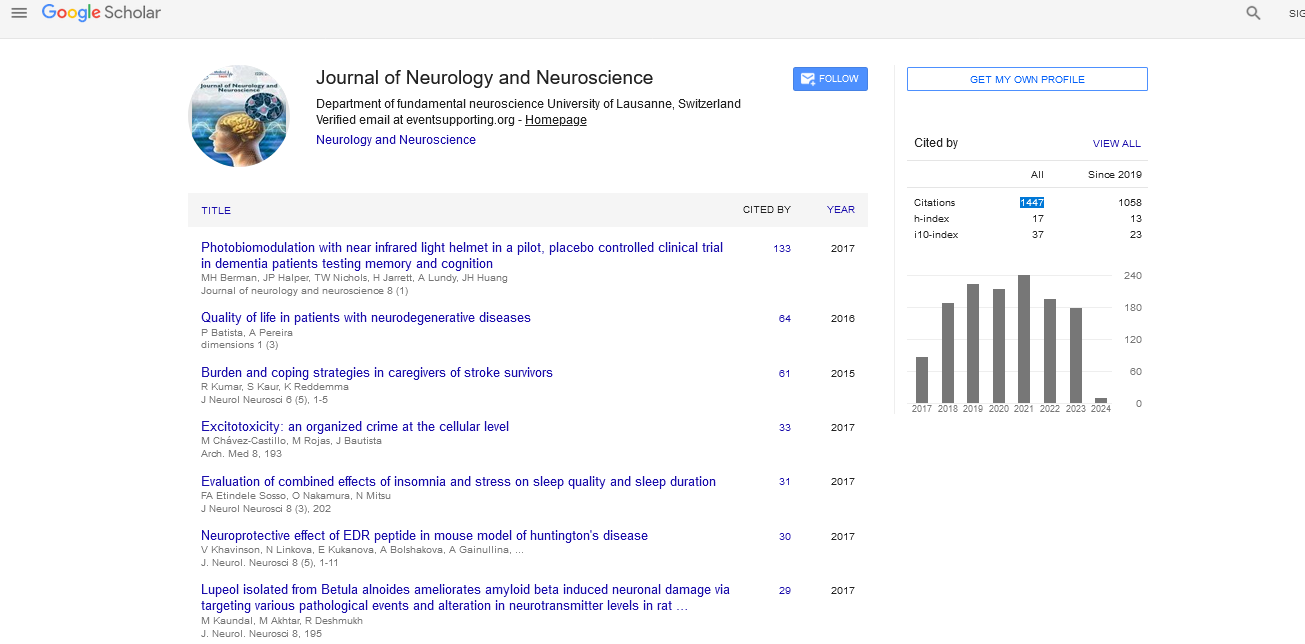

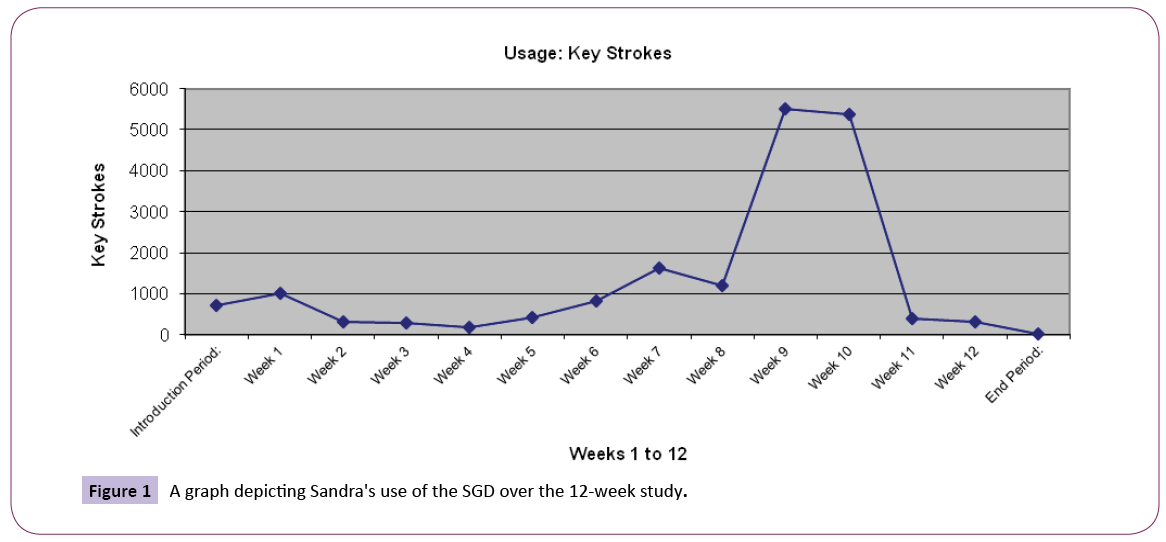

This child used the SGD over the full twelve weeks of this study. Over this time her communication events totalled 18,173, ranging from 175 to 5498 per week, making her the most avid SGD user of the 11 children. Of these, 2942 (16%) related to requests for food (e.g., apple, cereal, chocolate), 2148 (12%) to requests for items (e.g., computer, trampolines, playground) and 1667 (9%) to requests for access to places (e.g., outside, home). Sandra used the SGD more in the evenings (42.45%) than in the afternoons (29.73%) or mornings (27.82%), suggesting she liked to communicate with the SGD at home. In addition, Sandra used the SGD most during weeks 9 and 10. She used the self-voice speech output option for less than 3% of all her communications over the 12 weeks.

Analysis

The first point of analysis here has to be why self-voice was used so little by the child who made the highest number of communication events via the SGD over the course of this study (Table 4). Was it that she did not prefer this voice type, that the voice was unrecognisable to her, or was there another factor at play?

| Child | Gender | Age | School | School terms | NVMA |
|---|---|---|---|---|---|
| Sandra | Female | 129 months | | 3 | 71 months |

| Time of day | Mornings | Afternoons | Evenings | Total |
|---|---|---|---|---|
| Number of uses | 5066 | 5413 | 7728 | 18207** |
| Percentage | 27.82% | 29.73% | 42.45% | 100.00% |

| Voice type | Male (1) | Female (2) | Self (3) | *Self over PreR (4) | Total |
|---|---|---|---|---|---|
| Times used | 1 | 17193 | 449 | 530 | 18173** |
| Percentage | 0.01% | 94.61% | 2.47% | 2.92% | 100.00% |

*Self over PreR refers to original picture cards that were recorded over with a replacement word (e.g., ‘pooter’ for computer).

** The sum 18207 differs from the sum of 18173 as the former includes data from the introductory period.

Table 4: A report from the LAM data on the use of voice type by Sandra.
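The percentages in Table 4 are each category’s count divided by the row total. The short sketch below recomputes them from the counts in the table as an arithmetic check; the helper name `percentages` is illustrative. The two totals differ by 34 events, which the table’s second footnote attributes to the introductory period.

```python
def percentages(counts):
    """Return the total and each category's share of it, rounded to 2 d.p."""
    total = sum(counts.values())
    return total, {k: round(100 * v / total, 2) for k, v in counts.items()}


# Counts copied from Table 4 (Sandra).
time_of_day = {"Mornings": 5066, "Afternoons": 5413, "Evenings": 7728}
voice_type = {"Male": 1, "Female": 17193, "Self": 449, "Self over PreR": 530}

print(percentages(time_of_day))
# (18207, {'Mornings': 27.82, 'Afternoons': 29.73, 'Evenings': 42.45})
print(percentages(voice_type))
# (18173, {'Male': 0.01, 'Female': 94.61, 'Self': 2.47, 'Self over PreR': 2.92})
```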

In terms of recognition, we have argued throughout that this cognitive capacity is accomplished on the basis of both familiarity and recollection. An event may spark recognition on the grounds of pure familiarity [30], but in the absence of contextual information bringing the more meaningful aspect of that event to mind, full recognition will not be achieved [6,30,31]. This is often the case in normal ageing or in Alzheimer’s disease; was it also the case for Sandra?

It is possible that recollection may be an issue for Sandra [32]. For instance, previous studies using the Remember/Know (RK) procedure have found that individuals with autism often give far more ‘know’ than ‘remember’ responses, suggesting a greater reliance on familiarity than on recollection [33]. Perhaps, therefore, Sandra recognised the self-voice speech output option on the SGD as familiar but, unable to recollect the source of the voice as her own, failed to fully identify with it and chose the female digitised voice instead.

Alternatively, Sandra may simply have preferred the digitised female voice to the natural voice recording. Support for this inference comes from Kuhl et al. [29] and Klin [28], who both found a lack of preference by children with autism for the sounds of natural speech as opposed to electronically produced speech in experimentally designed studies. Sandra did, however, use the SGD for the full 12 weeks of the study, with a clear peak in usage between weeks 8 and 11 (Figure 1).

The LAM data showed that during this time the bulk of these communication events not only occurred in the late evening but also comprised single-word events that carried very little meaning (Table 5).

| Date | Time | Button | Code | Words |
|---|---|---|---|---|
| 31/03/2012 | 19:25:29 | SM2 | 0x04001449 | Afraid |
| 31/03/2012 | 19:25:30 | SM3 | 0x04001A30 | Angry |
| 31/03/2012 | 19:26:21 | SM4 | 0x04003B63 | Animal |
| 31/03/2012 | 19:26:22 | SM1 | 0x04001E16 | Apple |
| 31/03/2012 | 19:26:23 | SM2 | 0x0400351A | Arm |
| 01/04/2012 | 20:33:04 | SM1 | 0x04004C28 | Dad |
| 01/04/2012 | 20:33:06 | SM2 | 0x04003AB3 | Dog |
| 01/04/2012 | 20:33:09 | SM3 | 0x04004DF8 | Friend |
| 01/04/2012 | 20:33:11 | SM4 | 0x04004464 | Game |

Table 5: A report from the LAM data on Sandra's use of the SGD.

The SLT at Sandra’s school was contacted and confirmed that in school the device ‘had not worked’ for Sandra, in that she rarely if ever created a sentence with it, preferring instead to ‘play’ with it repeatedly to ‘hear the voice’.

Of interest, the parental report indicated that in the evenings Sandra would remove every picture card from her device and replace them one by one after pressing them on any of the five buttons to hear the digitised ‘voice’. Sandra would repeat this procedure many times during the two hours before bedtime, which explains, at least in part, the high number of single-word events.

On completion of the study, the SLT at Sandra’s school made contact to say that Sandra had increased her verbal repertoire from 6 words to 24 words since using the SGD. Furthermore, the ‘new’ vocabulary reflected words that were programmed on the SGD, such as ‘shoes’, ‘pain’ and ‘sorry’. The SLT and her mother deduced that she must have been using the SGD to familiarise herself with new words when she was activating the device each night. This finding would be in line with a small number of empirical studies which also reported improvement in speech skills after interventions via augmentative and alternative communication devices [34,35].

The findings lend support to the suggestion that familiarity increases with stimulus repetition, as it builds on perceptual representations which may initially have been implicit, and is important for slow, incremental learning [32, 36,37].

Overall, therefore, the self-voice option does not seem to have been preferred by Sandra when using the SGD, and she seems to have used the device more as a cause-and-effect toy than as a communication tool. Nevertheless, given the growth in her verbal repertoire, future research would benefit from testing the hypothesis that this mode of voice-operated communication device can enhance the communicative ability of certain children with autism [1,34,35].

ROBBIE

ROBBIE was fourteen at the time of this study. He presented with a CARS score of 36 (moderate-severe) and his performance on the BPVS failed to produce a score. He had attended this autism-specific school for over six years, and prior to this he was tutored at home via Applied Behaviour Analysis (ABA) methods. Robbie could repeat almost any word that he heard, but had no functional speech. The SLT reported that this child used a combination of manual signs and PECS to make himself understood. Robbie would go to his communication board, remove a single picture, go to an adult and place the picture in the adult’s hand, indicating he was communicating with PECS at phase 2 level [5]. Robbie never communicated spontaneously using PECS at home, preferring instead to rely on pre-linguistic gestures such as pointing or tugging at an adult’s sleeve. His use of PECS in school hours was at a rate of no more than 10 per day.

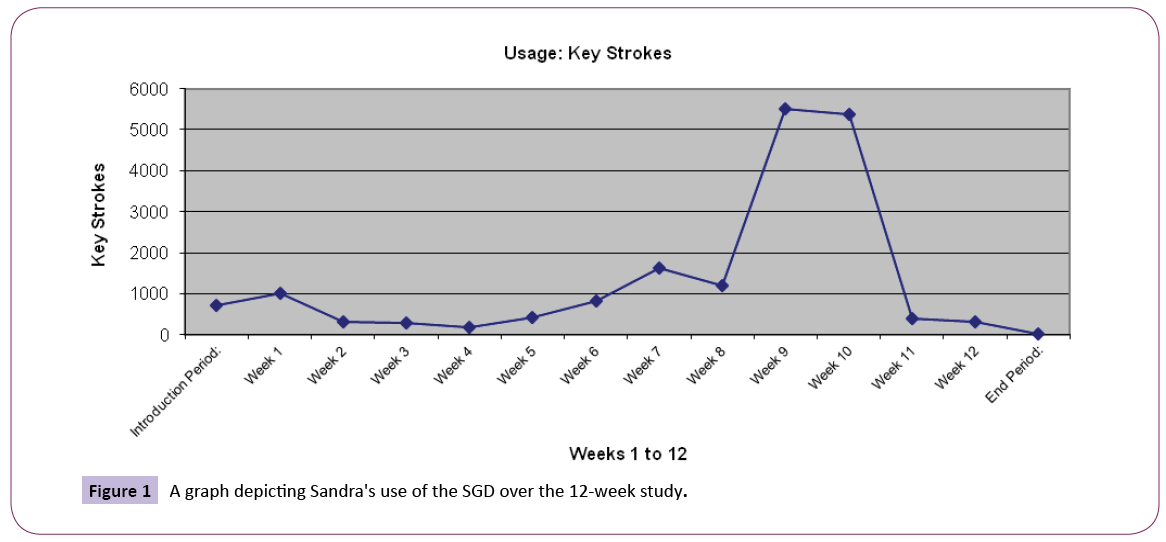

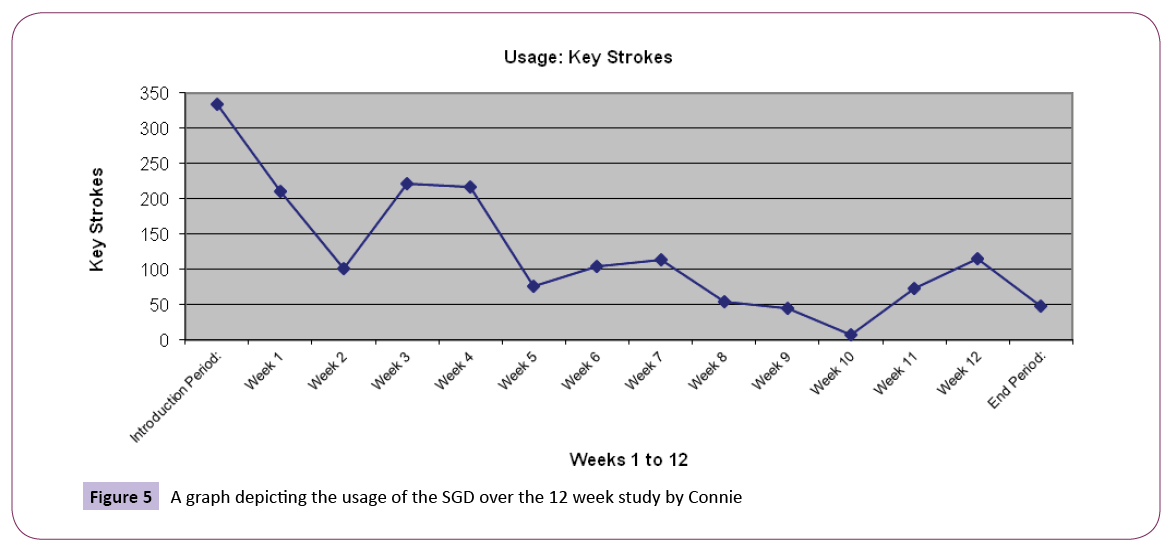

Robbie used the SGD for 11 of the 12 weeks of the study. His communication events ranged from 0 to 580 per week, with an overall total of 3133. Of these, 1056 (35%) related to requests for items (e.g., T.V., computer, puzzle, ball), 548 (18%) to requests for food (e.g., ice-cream, sandwich, apple) and 370 (12%) to people (e.g., mum, dad, teacher) (Figure 2).

Figure 2: A graph depicting the use of the SGD over the 12-week study by Robbie.

The data revealed that while Robbie made a number of unrelated one-word events (he repeated the word ‘computer’ over 120 times in a twenty-minute period), he also used the device to build a number of two- and three-part sentences (Table 6). Finally, Robbie communicated via the male digitised speech output option 77% of the time.

| Date | Time | Button | Code | Words |
|---|---|---|---|---|
| 27/01/2012 | 09:25:27 | SM1 | 0x4400011a | I want |
| 27/01/2012 | 09:25:29 | SM2 | 0x440004ec | To eat |
| 27/01/2012 | 09:25:30 | SM3 | 0x44002199 | Cereal |
| 27/01/2012 | 09:26:21 | SM1 | 0x44000886 | I like |
| 27/01/2012 | 09:26:22 | SM2 | 0x440049e3 | More |
| 27/01/2012 | 09:26:23 | SM3 | 0x4400479a | Thank you |
| 14/03/2012 | 09:33:04 | SM1 | 0x440017b7 | Too loud |
| 14/03/2012 | 09:33:06 | SM2 | 0x0000128b | doctor |
| 14/03/2012 | 09:33:09 | SM3 | 0x0000084c | Calpol |
| 14/03/2012 | 09:33:11 | SM4 | 0x000007e5 | ear |

Code prefix 0x44 = male digitised voice; 0x00 = self-voice.

Table 6: An example of sentence building on the SGD: Robbie.
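Table 6 suggests two mechanical steps for reading the log: decoding the voice type from the code prefix, and joining presses made in quick succession into a single utterance. The sketch below assumes the prefix is the top byte of the 32-bit code (consistent with the note under Table 6) and labels any other prefix, such as the 0x04 codes in Table 5, as unknown. The 3-second gap used to join presses into one utterance is a hypothetical threshold chosen for illustration; it is not a parameter of the study or of the LAM.

```python
from datetime import datetime, timedelta

# Code prefixes as given in the note to Table 6; other prefixes are not labelled here.
VOICE_PREFIX = {0x44: "male digitised", 0x00: "self"}


def voice_of(code):
    """Map a LAM code such as '0x4400011a' to a voice type via its top byte."""
    return VOICE_PREFIX.get(int(code, 16) >> 24, "unknown")


def group_utterances(rows, max_gap=timedelta(seconds=3)):
    """Join consecutive events into one utterance when they are <= max_gap apart.

    rows: (date 'dd/mm/yyyy', time 'HH:MM:SS', code, words) tuples in log order.
    Returns a list of (utterance text, list of voice types) pairs.
    """
    utterances, prev = [], None
    for date, time, code, words in rows:
        stamp = datetime.strptime(f"{date} {time}", "%d/%m/%Y %H:%M:%S")
        if prev is None or stamp - prev > max_gap:
            utterances.append(([], []))  # gap too long: start a new utterance
        utterances[-1][0].append(words)
        utterances[-1][1].append(voice_of(code))
        prev = stamp
    return [(" ".join(w), v) for w, v in utterances]


# The rows of Table 6 (Robbie).
TABLE_6 = [
    ("27/01/2012", "09:25:27", "0x4400011a", "I want"),
    ("27/01/2012", "09:25:29", "0x440004ec", "To eat"),
    ("27/01/2012", "09:25:30", "0x44002199", "Cereal"),
    ("27/01/2012", "09:26:21", "0x44000886", "I like"),
    ("27/01/2012", "09:26:22", "0x440049e3", "More"),
    ("27/01/2012", "09:26:23", "0x4400479a", "Thank you"),
    ("14/03/2012", "09:33:04", "0x440017b7", "Too loud"),
    ("14/03/2012", "09:33:06", "0x0000128b", "doctor"),
    ("14/03/2012", "09:33:09", "0x0000084c", "Calpol"),
    ("14/03/2012", "09:33:11", "0x000007e5", "ear"),
]

for text, voices in group_utterances(TABLE_6):
    print(text, voices)
```

With this threshold the ten rows fall into three utterances. The last one (‘Too loud doctor Calpol ear’) mixes one male digitised card with three self-voice cards, which a per-event voice count alone would not reveal.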

Analysis

Robbie should have been able to recognise the voice on the device as his own, as he had acknowledged it at testing and induction. However, there was a three-month gap between training, induction and the allocation of the personalised SGDs, which could mean that any recognition of self-voice at testing was simply not maintained over that time [38]. In other words, the recognition he showed for self-voice may have reflected only his ability to ‘fast map’ (i.e., to map the sound of his own recorded voice to memory after one or two exposures), which was then assessed immediately via a recognition test. We did not consider the child’s ability to remember the sound of their own recorded voice over an extended period.

It was recently suggested that while the child with autism may initially recognise the phonological forms of new words or sounds, these initial representations are fragile and may not be consolidated in long-term memory [38]. When children were tested immediately following exposure to new words, ‘the ease at which children with ASD’ learned and recognised the new words relative to age- and ability-matched TD children was noted [38]. However, although the TD children performed more poorly on this immediate task, when assessed after a time delay they demonstrated greater recall, ‘despite having no further exposure to the novel words’ [38]. It was suggested that the TD children had assimilated and encoded the novel words into memory, transferring the newly formed memories ‘from medial temporal lobe structures, including the hippocampus, to neocortical structures’, making the new memories robust over time [38]. The children with autism, however, did not show any such consolidation effect. It was suggested that there is ‘considerable evidence that neural connectivity is disrupted in ASD, which may interfere with the transfer and integration of newly learned information with existing knowledge’ [38]. Although it investigated new word learning rather than the recognition of self-voice, the study by Norbury [38] might imply that Robbie did not maintain the auditory representations of self-voice over the three-month gap between testing and SGD allocation.

As such, while highly speculative, it is possible to infer that in the absence of, or with only poorly consolidated, memory for self-voice over time, Robbie may have failed to ‘recollect’ the natural voice on the SGD as his own, which in turn may have affected his preference for that voice type. This would prove an interesting topic for future research.

NITA

Nita was thirteen years old at the time of testing, with a CARS rating of 38 (moderate-severe) and an IQ of 64 (Table 1). She was the only non-Irish child in the study, having moved from India with her family six years previously. Her parents spoke a mixture of English and Urdu, and while Nita could say 3-4 words in English, she preferred to utter these words in her native tongue.

Nita’s father described his daughter as ‘very rigid’ in her behaviours. He said she ‘had to sit in exactly the same spot on the couch’ every evening and had to stir her tea ‘exactly seven times’ before drinking it. Her father described all her communication as ‘sporadic’ and ‘sparse’ and wrote that Nita veered between sign language and PECS in the home. Nita had had access to a Go Talk 4+ (www.assistireland.ie) voice output communication aid (VOCA) in the past, but her parents and teachers reported that this had not worked for Nita and that she preferred to use sign and/or PECS to make herself understood.

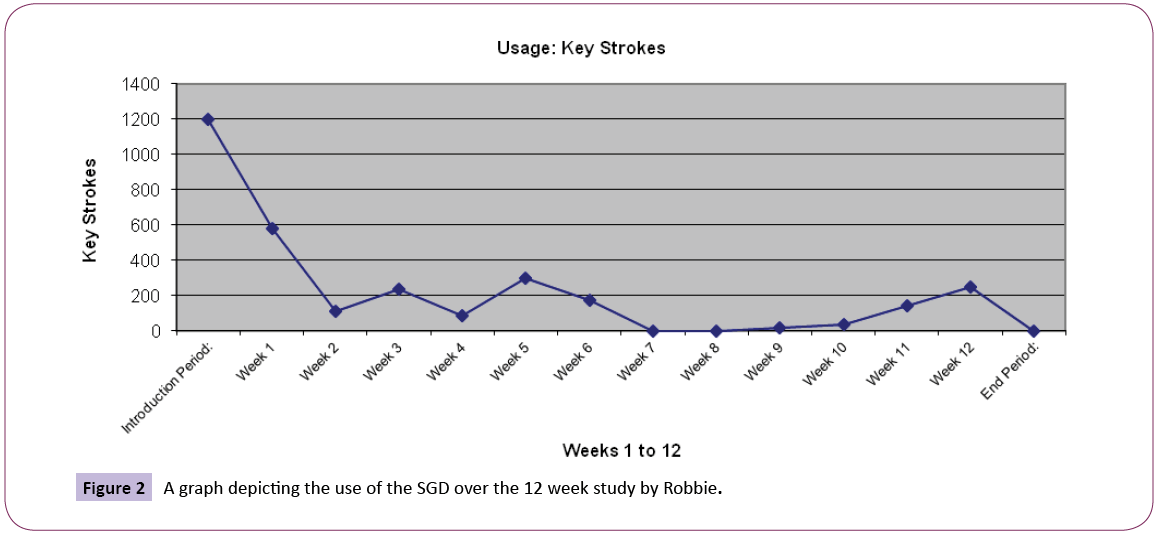

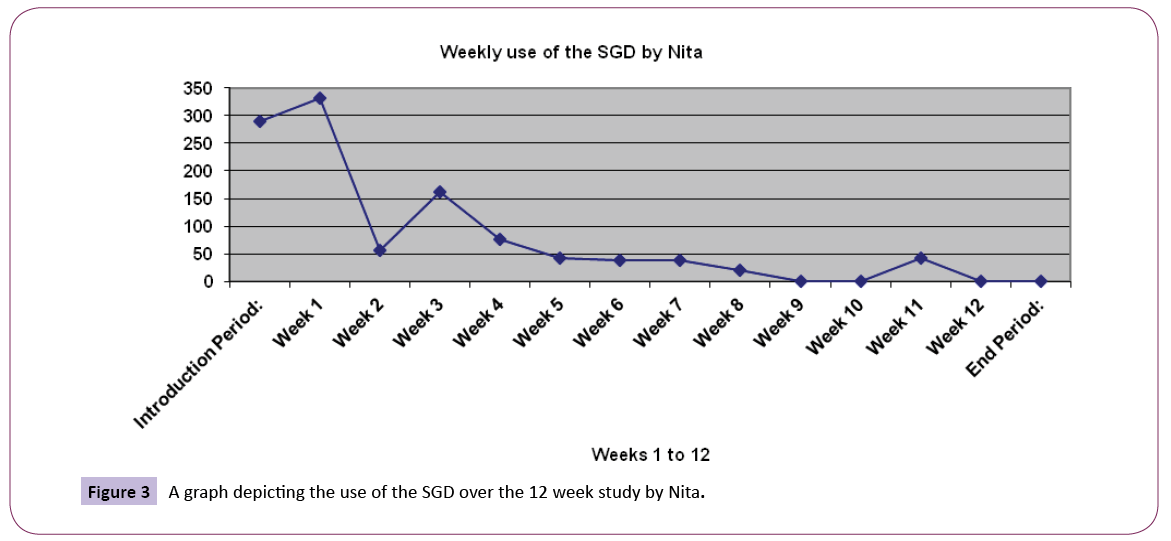

Nita used the SGD in 10 of the 12 weeks (missing weeks 9 and 10). She used her device most (56.3%) during the first two weeks of the study, thereafter tapering off to between 1 and 57 communication events per week. The largest categories of Nita’s communications were requests for items (19%) and for access to places (15%) such as ‘home’, the ‘car’ and ‘outside.’ Over this time her communication events totalled 1,101, ranging from 0 to 332 per week, with a mean of 1.5 utterances per hour, which is relatively consistent with previous research that collected communication samples from children with ASD (Figure 3).

Figure 3: A graph depicting Nita’s use of the SGD over the 12-week study.

Analysis

Before surmising that recollection was impaired in this child, making self-voice less recognisable and thus not preferred as the main speech output option, it must be noted that the voice recorded onto the SGD was not Nita’s but that of her aunt. Nita was unwilling to echo any of the 81 words to be recorded, and while content to use the SGD, did not wish to cooperate with this part of the proceedings. It was not possible at the time to find a child of similar age, gender and ethnicity, so Nita’s aunt, who was ten years her senior, agreed to provide her voice for recording purposes.

Obviously, using the voice of an adult rather than an adolescent is problematic when discussing the child’s preference for self-voice on her SGD. However, it could be argued, in line with the hypothesis of van Santen and Black, that a personalised natural voice, as opposed to either digitised or synthesised speech, ‘will psychologically reinforce powerful motivational factors and a sense of owness for communication so that the frequency and richness of AAC use, and its acceptance by family members and friends, will be enhanced.’ Even so, this was not the case here. Nita maintained a relatively ‘sparse’ and ‘sporadic’ communication style that veered between PECS and sign during her time with the SGD.

Despite showing no overt preference for a natural recorded voice on the SGD, Nita did use the device to communicate for the duration of the study. It is difficult to ascertain any preference for the SGD over the other forms of AAC available to her, as the parental report indicated that she ‘veered between PECS, sign and the SGD.’ It is clear, however, that her use of the SGD was fairly minimal, at approximately one to two utterances per hour.

There are two possible ways of analysing Nita’s use of the SGD as a communication tool. We could conceptualise her low communication rate as a function of her cognitive level and her degree of autism. From this, we could infer that, because of her diagnosis and its associated impairment of social interaction, Nita will always show little spontaneous drive to communicate with others. Through this lens, allocating any form of AAC will certainly provide the child with a means of expressing herself, but rather than serving as a gateway to more frequent and richer AAC use, the SGD would potentially only ever be used ‘to request something or to attract attention to oneself’.

Alternatively, we could look beyond the diagnosis and consider the child’s social world. For instance, it is suggested that from the moment a child receives a diagnosis, the environment in which the child develops changes. People in the child’s life are often more cautious about allowing a diagnosed infant to explore objects with its mouth or to crawl or walk freely, most likely because of a natural fear of accidents in vulnerable children. Nonetheless, the result is a less richly explored environment for the developing child.

In terms of communication, it is noted that when TD toddlers begin to name things, their parents allow them to make grammatical errors and overgeneralisations, but this is not so when the child has a diagnosis. Karmiloff-Smith et al. suggest that parents are often afraid that a child with lower intelligence will never learn the correct term if allowed to overgeneralise in the first instance. However, initial overgeneralisations in the TD child are said to encourage category formation: for instance, by calling different animals ‘cats’, the child starts to create an implicit animal category (e.g., Quin and Rosch). It is well established that category formation is impaired in several neurodevelopmental disorders, and Karmiloff-Smith et al. (2012: e2) suggest that the often ‘unconscious assumptions about what atypical children can and cannot learn may unwittingly lead parents to provide less variation in linguistic input and in general, a less varied environment to explore.’

Nita shows evidence of recognition, at least on the basis of familiarity [30]. During testing she successfully matched the voices of people from her school to their corresponding photographs, and she was able to recognise a recording of her own voice with and without visual prompts, which suggests some intact ability to retrieve contextual information from memory. What is suggested here is that, while Nita may not communicate very much via any form of AAC, this may be less a result of her cognitive capacity or the severity of her disorder per se, and more to do with the ways in which all of us, often unwittingly, contribute to her environment. Perhaps greater interaction with children with autism in general, and with Nita in particular, would encourage her to reciprocate.

FRANCIS

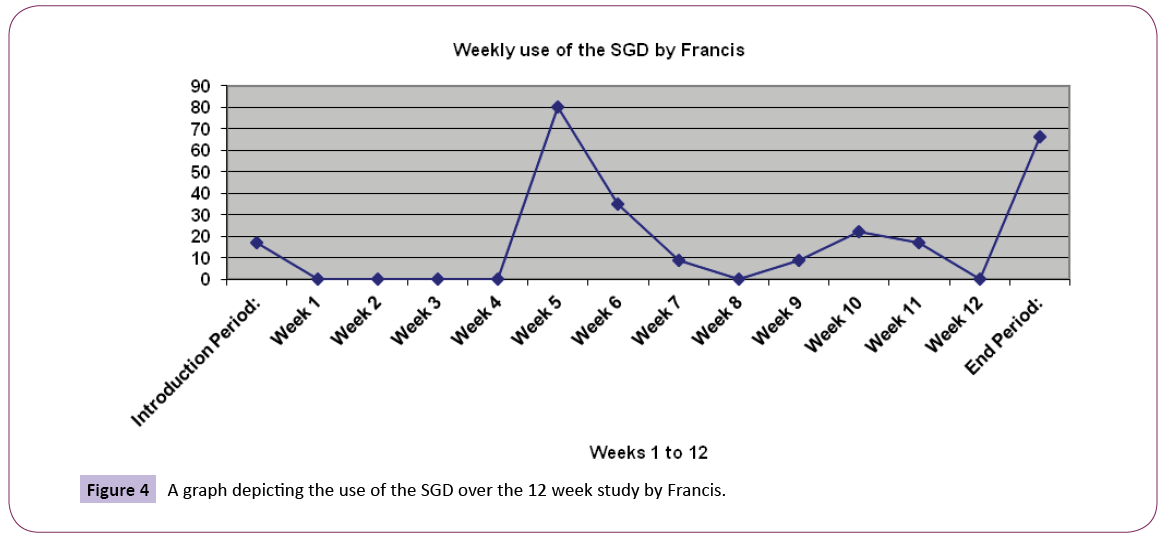

Francis was ten years old at the time of testing, and he presented with a CARS score of 36 (moderate-severe) and an IQ of 52 (Table 1). His teacher reported that Francis would go to his communication board, remove a single picture, return to a caregiver and place the picture in the caregiver’s hand, but that he preferred to use gestures and manual signs to gain attention. The parental report confirmed the teacher’s report and added that ‘Francis will tug at sleeves or grab my hand to point it in the direction of what he wants.’ Accordingly, Francis could communicate using single-meaning pictures, but he relied primarily on pre-linguistic behaviours such as pointing, reaching, eye gaze and facial expressions. Francis used a maximum of two words per day to communicate (i.e., ‘yes’ and ‘no’), and he had no previous experience with a speech-generating communication device.

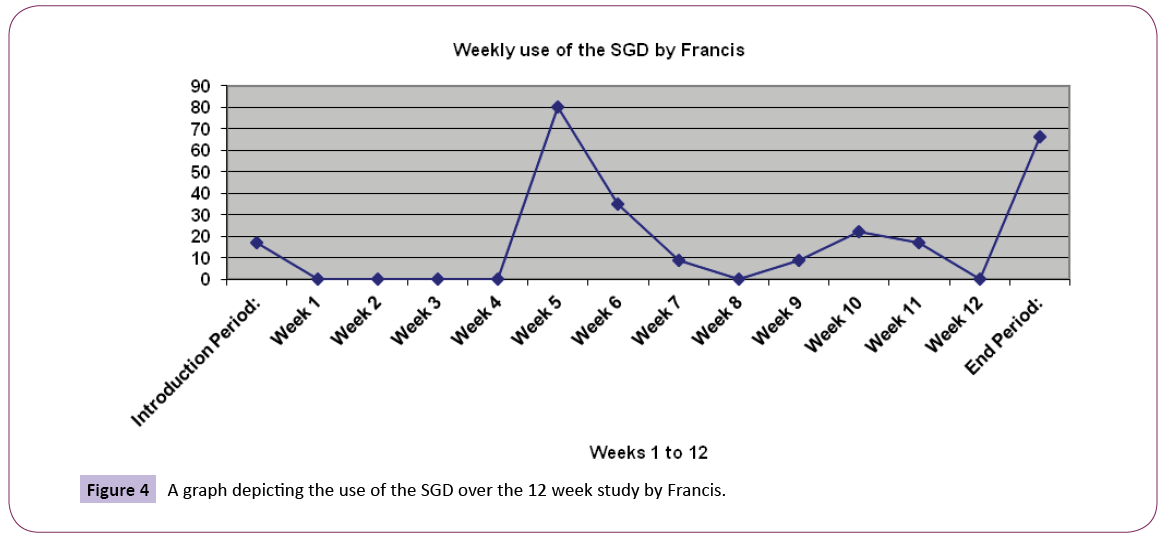

The LAM data show that Francis used the SGD unevenly over the 12 weeks of the study (Figure 4), with his lowest usage during the first four weeks. Over the study period his communications totalled 255 events, ranging from 0 to 80 per week. This result suggests that, of the six children who continued to use the device after week 6, Francis was the least frequent user of the SGD as a communication tool.

Figure 4: A graph depicting Francis’s use of the SGD over the 12-week study.

During this time, the largest categories of his communications were requests for items such as the computer and games (19%) and for access to places (8%). Francis was unusual insofar as he used the digitised female voice for 70% of his total communications via the SGD.

Analysis

There are three main points to discuss here: the first relates to Francis’s low rate of SGD use, the second to the function of his communications, and the third to his choice of voice type. In relation to the first point, baseline data on his current rate and mode of communication were obtained before the personalised SGD was allocated. It was noted that Francis could echo words after a speaker, but that he uttered no more than two words spontaneously on most days, preferring instead to use pre-linguistic behaviours such as gesturing, pointing, facial expressions and tugging at the sleeves of others to make his needs understood. Francis was at phase-two level in his use of PECS, and both his teachers and his parents stated that his communications via this mode were also fewer than three a day. Accordingly, his total of 255 communications over the 12 weeks (roughly 21 per week, or about three per day, peaking at 80 in a single week) represents neither an increase nor a decrease in his typical rate of communication, nor is it out of line with the findings of Stone and Caro-Martinez, who found an average communication rate of 1.5 per day in children with autism during school hours.

Turning to the second point, the function of his communication, baseline data suggest that when Francis made contact with others before having the SGD, most of his requests were for access to activities or items, for instance, access to the school yard or to a favourite DVD. The LAM data suggest little change in the function of his communication as a result of using a voice-operated communication aid (VOCA). Both observations imply that while Francis accepted the SGD as a communication tool, and was very capable of operating it, having a VOCA did not enhance the frequency or richness of his AAC use [2].

The third point relates to his use of the female voice as his preferred speech output option on the SGD. One possible explanation is that the SGD was damaged in some way, leaving him no option but to use this voice. This was checked, however, and the data show that he did use the other two speech output options over the 12 weeks, just not to the same extent as the female voice (Table 7).

| Time of day | Mornings (1) | Afternoons (2) | Evenings (3) | Total |
|---|---|---|---|---|
| Number of uses | 174 | 58 | 23 | 255* |
| Percentage | 68.24% | 22.75% | 9.02% | 100.00% |

| Voice type | Male (1) | Female (2) | Self (3) | Self over PreR (4) | Total |
|---|---|---|---|---|---|
| Times used | 1 | 169 | 69 | 0 | 239* |
| Percentage | 0.42% | 70.71% | 28.87% | 0.00% | 100.00% |

*The totals reported here differ because the first includes data from the introductory period while the second does not.

Table 7: A report from the LAM data on the use of voice-type by Francis.
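As a simple check on the arithmetic in Table 7, each percentage can be recomputed as a category's count divided by its table's own total. The short Python sketch below does this; the dictionary names and the `shares` helper are illustrative only (they are not part of the LAM software), and the counts are transcribed by hand from the table. Note that the two tables are normalised against different totals (255 and 239), as explained in the table footnote.

```python
# Counts transcribed by hand from Table 7 (LAM data for Francis).
# Names are illustrative; they do not come from the LAM software.
time_of_day = {"Mornings": 174, "Afternoons": 58, "Evenings": 23}
voice_type = {"Male": 1, "Female": 169, "Self": 69, "Self over PreR": 0}


def shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each category's percentage of this table's own total, to 2 dp."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 2) for label, n in counts.items()}


print(sum(time_of_day.values()), shares(time_of_day))
print(sum(voice_type.values()), shares(voice_type))
```

The recomputed values match the published figures (e.g. 169 of 239 uses gives 70.71% for the female voice). Because each value is rounded independently, the time-of-day percentages sum to 100.01% rather than exactly 100%.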

There was no parental report for this child, and neither his teacher nor the SLT could offer any reason for his choice of voice type, so we considered aspects of recognition memory instead.

This ten-year-old boy may have had some impairment in retrieval from memory at the point of making a recognition judgement [6,30,31]. In line with Mandler, this could reflect an ability to recognise well via familiarity, but less well when a search for contextual information is required. Jacoby, by contrast, might suggest that the issue with the child’s recollection reflects how deeply Francis processed the to-be-remembered information at the time of encoding. Given that children with autism are characterised by a low level of social interest, it is likely that impaired recognition of faces and voices results from an initial lack of attention to people (Burack, Enns, Stauder, Mottron, and Randolph).

There are, however, strong suggestions that when visual cues or prompts are given to support retrieval, recognition is accomplished in ASD. This finding resonates with the task-support hypothesis, according to which ‘procedures that provide cues to the remembered material at test attenuate the memory difficulties experienced by individuals with ASD’ [33]. In future, it would be worth testing the hypothesis that Francis used the female voice on the SGD primarily because, while all three voices on the SGD were somewhat familiar to him, without some form of prompt or memory cue he was unable to recollect which voice type was his own.

Alternatively, Francis may simply have preferred the sound of the female digitised voice. I suggest this because he recognised his own voice on ten occasions in Study 5 (A Test of Self-Voice Recognition in LFA), where no photographs were used as prompts, implying that this child could recognise his own voice but chose not to use it when communicating via the SGD.

Perhaps self-voice ‘vexed’ him, as there was too great a discrepancy between what he thought it should sound like and how it actually sounded. Or perhaps it was simply too difficult to recognise a voice that the child hardly ever used in the first place. Either way, the data suggest that the personalised SGD did not enhance the frequency or richness of his AAC use, and that he did not prefer to communicate via the self-voice speech output option.

JIM